Homeruns Are King

Summary

It’s a Tuesday night, the Cubs game is postponed, and I should either be working or doing homework. Instead, here I am exploring home runs in baseball because it is way more fun. I’d argue that aside from seeing something historic (a no-hitter or a perfect game), most fans want to see home runs when they go to games.

In this analysis, I perform some data cleanup to calculate raw numbers from the percentages that FanGraphs provides and shift around some data to build a model that predicts home run totals. The inputs to this model will be each player’s statistics from the previous season. Home runs are becoming increasingly important in baseball, and if you read my analysis on walks, you’d remember that they are being hit at an alarming rate. Teams are building their rosters around power hitters, but want to be fiscally conscious. So if a team can identify a future home run hero and save some cash, it’s a major win.

Data Support

To begin, let’s take a look at the league leader and league average in homeruns over the last 18 seasons.

Take out Barry Bonds’s 73 home run season in 2001 (which is crazy!) and 2017 had the highest single-season HR total by a player over the entire time frame analyzed. The season-high total has risen each year since 2014. Perhaps coincidentally, the league average home run total has also risen each year since 2014, and 2017 had the highest league average of the period.

Whether this increase is driven by worse pitching, better hitting, or juiced baseballs, the increase in home runs is real and needs to be taken seriously. The game is shifting towards power now more than ever. Teams in the top 5 in home runs hit average 87 wins, while teams in the bottom 5 average 72 wins. In a sport where every win and loss matters, this could be the difference between a wild card play-in game and winning your division, or missing the playoffs entirely.
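For anyone who wants to reproduce that top 5 vs. bottom 5 comparison, here is a minimal Python sketch. The row layout `(season, team, home_runs, wins)`, the function name, and every number in it are assumptions made up for illustration; they are not the data behind the 87 and 72 win figures.

```python
from collections import defaultdict
from statistics import mean

def avg_wins_by_hr_rank(rows, n=5):
    """Per season, average the wins of the n teams with the most and fewest home runs."""
    by_season = defaultdict(list)
    for season, team, home_runs, wins in rows:
        by_season[season].append((home_runs, wins))
    result = {}
    for season, teams in sorted(by_season.items()):
        ranked = sorted(teams, key=lambda t: t[0], reverse=True)  # most HR first
        result[season] = {
            "top": mean(w for _, w in ranked[:n]),
            "bottom": mean(w for _, w in ranked[-n:]),
        }
    return result

# Made-up rows, only to show the shape of the input (not real standings).
rows = [
    (2016, "AAA", 230, 92), (2016, "BBB", 215, 88), (2016, "CCC", 190, 81),
    (2016, "DDD", 170, 79), (2016, "EEE", 150, 74), (2016, "FFF", 135, 70),
]
summary = avg_wins_by_hr_rank(rows, n=2)  # n=2 because the toy league has 6 teams
print(summary)
```

With a full 30-team league you would leave `n=5` and plot the `top` and `bottom` values by season.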

The plot below illustrates the average win total by season for the best and worst of the league at hitting home runs.

Modeling

Next, let’s actually see how accurately we can predict a player’s home runs. Since it’s a Tuesday night and I should be doing homework, let’s use a modeling technique that is great at quickly giving answers, but is somewhat of a black box. A random forest is essentially a large collection of decision trees, each built a little differently, whose predictions are averaged to produce the final answer. A random forest is typically not a great final solution, but it is a great first step: a quick and dirty model that you can use to back up your initial findings from exploratory data analysis.

Typically, once you get answers from a model like this, you would build more in-depth models by analyzing which variables are most important, but we’re not going to do that tonight.

The inputs to our model are going to be ~20 offensive statistics from the prior year. Since this is typically an early stage in the analysis and data science is an art, I’m going to leave Team and Season in the inputs. I think using Team and Season as predictors makes sense. As we saw above, the average home run total has increased from season to season, and this could be important in predicting what a player does next year (think of it as inflation). Similarly, there is a case to be made for including team. Teams play half of their games in one park, and some of these parks are more hitter-friendly or pitcher-friendly than others. We want our model to pick up the fact that more home runs are hit in the Reds’ stadium than in the Padres’ stadium each year.
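The "prior year stats in, next year home runs out" setup is easiest to see in code. This is only a sketch under assumptions: the field names (`player`, `season`, `team`, `hr`, `iso`), the function name, and the sample rows are all hypothetical, and only two of the ~20 statistics are shown.

```python
def build_lagged_rows(stats):
    """Pair each player's season-N stats with that player's home runs in season N+1."""
    by_key = {(r["player"], r["season"]): r for r in stats}
    rows = []
    for (player, season), prev in sorted(by_key.items()):
        nxt = by_key.get((player, season + 1))
        if nxt is None:  # no following season on record, so there is no target
            continue
        rows.append({
            "player": player,
            "season": season,        # kept as a predictor ("inflation")
            "team": prev["team"],    # kept as a predictor (park effects)
            "prev_hr": prev["hr"],
            "prev_iso": prev["iso"],
            "next_hr": nxt["hr"],    # what the model tries to predict
        })
    return rows

# Made-up player seasons, for illustration only.
stats = [
    {"player": "Player A", "season": 2015, "team": "CIN", "hr": 20, "iso": 0.180},
    {"player": "Player A", "season": 2016, "team": "CIN", "hr": 25, "iso": 0.210},
    {"player": "Player A", "season": 2017, "team": "SDP", "hr": 18, "iso": 0.160},
    {"player": "Player B", "season": 2016, "team": "SDP", "hr": 8, "iso": 0.110},
]
lagged = build_lagged_rows(stats)
for row in lagged:
    print(row)
```

Player B drops out here because there is no 2017 row to use as a target, which is the same reason the most recent season can only be used for forecasting.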

First, I’ll split the dataset into two datasets: one to build the model and one to test it on. Then, after building the model, we can see how accurate it is by comparing how many home runs were actually hit with how many were predicted on the test set. In this case, about 3500 player + season combinations were randomly selected from 2000-2016 to build the model, and about 1500 were held out as the test dataset.
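A random split like that can be sketched in a few lines of Python. The 30% test fraction, the seed, and the function name are assumptions chosen so that 5000 rows land near the 3500 / 1500 split described above; the rows here are just placeholder integers.

```python
import random

def split_rows(rows, test_fraction=0.3, seed=42):
    """Shuffle a copy of the rows and hold out a fraction of them for testing."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)  # fixed seed so the split is repeatable
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]

# Placeholder rows standing in for player + season combinations.
rows = list(range(5000))
train, test = split_rows(rows)
print(len(train), len(test))
```

The key property is that no row appears in both sets, so the test set is data the model has never seen.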

Now, I’ll quickly gloss over the model call: we will use 500 trees in our random forest. Loosely put, this means we’ll be building 500 models and then averaging them all together for a final solution. In each one of these models, I specified that only 7 variables be considered at a time. This helps prevent our model from overfitting (or focusing too much on one situation). I chose 7 for analytical reasons: standard practice for random forests that predict a number is to use about #predictors / 3, which in our case was 22 / 3 = 7 (roughly).
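For readers who want to see what such a model call can look like, here is a sketch using scikit-learn. This is an assumption on my part, not necessarily the tool used for this post, and the data is synthetic random noise with a planted signal rather than FanGraphs statistics. In scikit-learn, `n_estimators` is the number of trees and `max_features` is the number of variables considered at each split.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor

rng = np.random.default_rng(0)
n_rows, n_predictors = 300, 22

# Synthetic stand-in for 22 prior-season statistics (not real baseball data).
X = rng.normal(size=(n_rows, n_predictors))
# Synthetic target driven mostly by the first two columns, plus noise.
y = 15 + 6 * X[:, 0] + 3 * X[:, 1] + rng.normal(scale=1.0, size=n_rows)

mtry = n_predictors // 3  # the "#predictors / 3" rule of thumb: 22 // 3 = 7

forest = RandomForestRegressor(n_estimators=500, max_features=mtry, random_state=0)
forest.fit(X[:200], y[:200])      # build on the first 200 rows
preds = forest.predict(X[200:])   # predict the 100 held-out rows
print(mtry, len(forest.estimators_), preds.shape)
```

Each of the 500 trees sees a bootstrap sample of the training rows, and the prediction for a row is the average of the 500 individual tree predictions.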

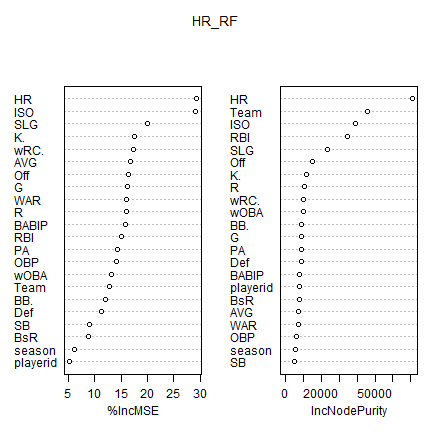

The graph below will help visualize the results of the model.

Interpreting this graph looks intimidating (and it should, because we just used machine learning!), but it is actually very simple. You can think of the graph as listing the most important variables in the model once it has been built. For example, the previous season’s home runs and a batter’s ISO (isolated power) are strong predictors of how many home runs a player will hit next year. On the flip side, things listed at the bottom (playerID, season, and baseruns) are not hugely important in predicting home runs.
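A ranking like this can be pulled out of a fitted forest directly. The sketch below again assumes scikit-learn and uses its impurity-based `feature_importances_`, which is one common importance measure and may differ from the one behind the graph. The column names are hypothetical and the target is synthetic, deliberately constructed so that the first two columns matter, so it shows the mechanics and not a finding.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor

rng = np.random.default_rng(1)
names = ["prev_hr", "prev_iso", "prev_baseruns", "season"]  # hypothetical columns

# Synthetic data: only the first two columns influence the target by construction.
X = rng.normal(size=(400, len(names)))
y = 5 * X[:, 0] + 3 * X[:, 1] + rng.normal(scale=0.5, size=400)

forest = RandomForestRegressor(n_estimators=200, random_state=1).fit(X, y)

# Pair each column name with its importance and sort from most to least important.
ranking = sorted(zip(names, forest.feature_importances_),
                 key=lambda pair: pair[1], reverse=True)
for name, score in ranking:
    print(f"{name:15s} {score:.3f}")
```

The scores sum to 1, so each one can be read as that variable's share of the forest's total error reduction.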

Armed with this information, we would typically go on to build more predictive models, but let’s stick with this for now.

Accuracy and Results

So how accurate was the model? The 1500 players in our test set went on to hit 19,829 home runs. Our model predicted 19,522 home runs, or 98% of the actual total! Now, this is not a typical measure of model accuracy, since individual misses in opposite directions can cancel out in the total, but I think it makes sense given the environment we are considering.
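To make the difference between that aggregate measure and a more typical per-player measure concrete, here is a small Python sketch. The two totals are the ones quoted above; the four per-player values and the function names are made up for illustration.

```python
def aggregate_ratio(actual, predicted):
    """Total predicted home runs as a share of total actual home runs."""
    return sum(predicted) / sum(actual)

def mean_absolute_error(actual, predicted):
    """Average size of the per-player miss, ignoring direction."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)

# Totals quoted in the post.
post_ratio = 19522 / 19829
print(f"aggregate ratio from the post: {post_ratio:.3f}")

# Made-up per-player values: the totals match exactly, yet two players are off by 18.
actual = [40, 10, 25, 30]
predicted = [22, 28, 25, 30]
print(aggregate_ratio(actual, predicted))      # totals agree
print(mean_absolute_error(actual, predicted))  # individual misses remain
```

An aggregate ratio near 1 says the model gets the league-wide level about right; a per-player error such as mean absolute error says how far off a typical individual prediction is.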

Looking at the predictions, there are some cases where our model was dead accurate and some where it was very, very wrong. Consider one observation: in 2017 the model predicted 22 home runs for Giancarlo Stanton… and he went on to hit 59. Stanton had one of the best offensive seasons in recent memory. Models are typically not very good at picking up outliers like this, so the miss is understandable.

With that said, what can we expect for 2018 given this model?

Our model predicts that the league average home run total will continue to rise to about 14.5 and that the top ten players in home runs (twelve names once ties are included) will be:

- Aaron Judge – 36

- Giancarlo Stanton – 33

- Josh Donaldson – 30

- Justin Smoak – 30

- Nelson Cruz – 29

- Charlie Blackmon – 29

- Paul Goldschmidt – 29

- Nolan Arenado – 29

- Marcell Ozuna – 28

- Joey Votto – 27

- Jonathan Schoop – 27

- Jose Abreu – 27

I suspect these totals are a tad low, given the rise in home runs over the last few years, and this is certainly a drawback of using a quick and dirty model. Had we continued, we could have tuned our model to be more sensitive to higher home run seasons, which would naturally inflate the totals. This is also where the art in data science comes in. We know that home runs will be high, and we have to reflect that in our modeling approach.
